Latest News

Our faculty development and health professions education community is a dynamic space, always embracing new ideas and experimenting with innovative approaches. That’s why we’re excited to launch a new series dedicated to exploring the creative innovations and transformative projects shaping the future of health professions education. We aim to highlight new ideas and spark meaningful conversations to further growth in our community.

This month, we sat down with Alice Kam, Assistant Professor, Department of Medicine, Division of Physical Medicine and Rehabilitation, University of Toronto, who is exploring the integration of generative AI into health professions education through her Compassion-AI Project.

Could you please describe the GenAI-enabled OSCE bot and what it does?

The GenAI-enabled OSCE bot has two main components. First, it acts as a standardized patient, giving learners the chance to practice hands-on interactions in a psychologically safe space. Second, it leads a GenAI-enabled debrief that supports learners’ reflective practice. After both steps, a GenAI analysis bot reviews the interaction and the reflection and provides process- and outcome-based feedback on competencies, including reflective ability, using the CanMEDS competency framework. The overall goal is to support ‘reflection-on-action’ so that learners can strengthen their continuing professional competence and growth through the learning experience.

What prompted this work?

This project was inspired by my clinical work as a physiatrist managing complex, chronic conditions. Over the years, I noticed that medical education often emphasizes the biomedical model while overlooking social determinants of health and complex contextual factors. That gap contributes to persistent challenges in patient-centered care. Because of this, I wanted to design a tool that integrates the perspectives of patients, caregivers, learners, educators, and researchers, while supporting reflective practice in a scalable, equitable, and compassionate way.

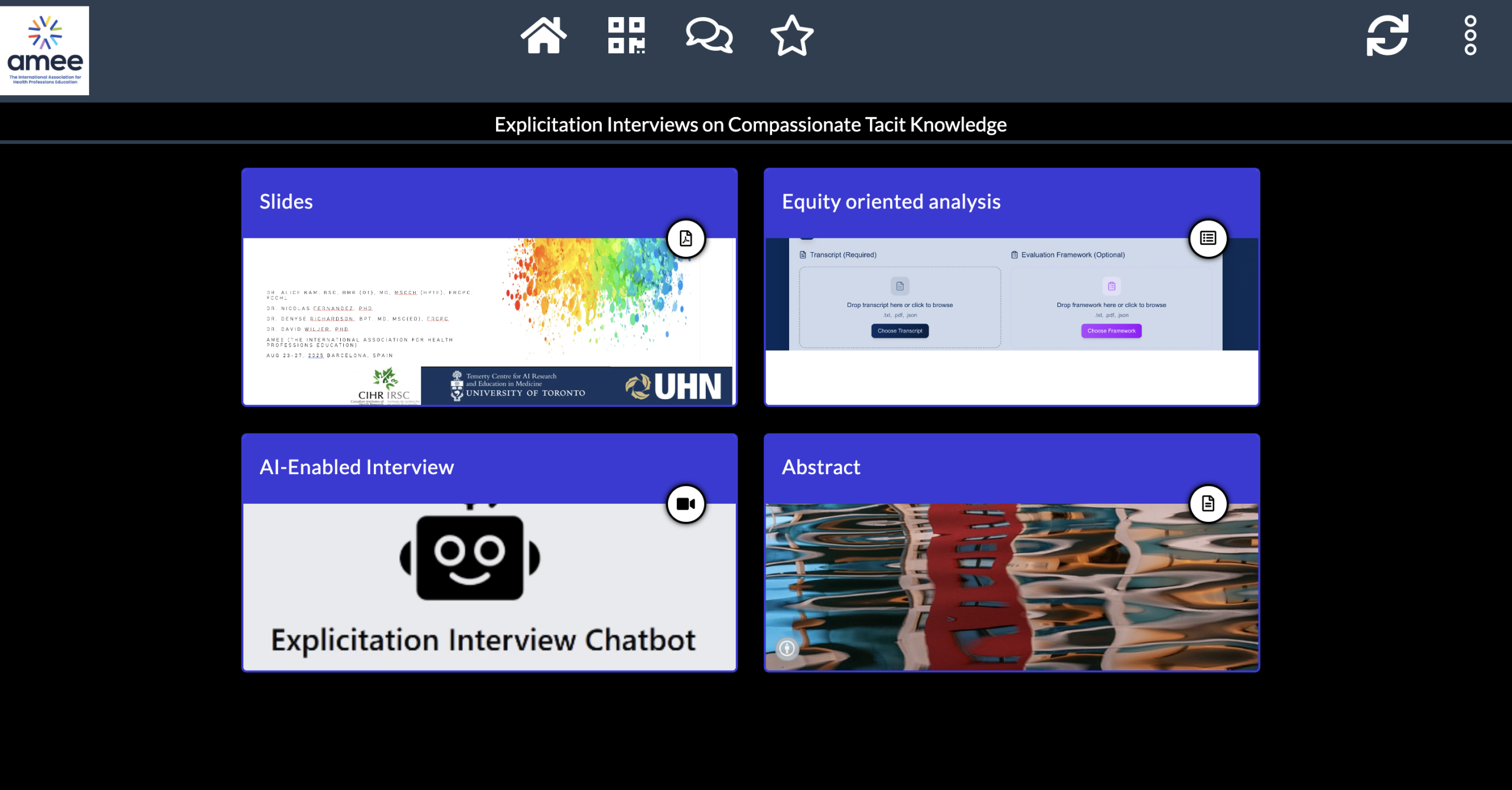

Reference: AMEE Learning Tool Box 2025 https://api.ltb.io/show/ACLCB

What was the development process like for this project?

The project evolved over a five-year SPIRAL co-design process that progressed through three cycles. The first cycle focused on identifying knowledge gaps using multiple-choice questions; the second involved collecting qualitative data through interviews; and the third addressed structural barriers such as sustainability and limited teaching resources. Across these cycles, it became clear that the central gap was the need to build transformative agency for change.

In addition to the AI-enabled OSCE, we developed an equity-oriented tool for researchers. Grounded in Cultural-Historical Activity Theory (CHAT) as a framework for equity-oriented research, this work ultimately led to a CHAT-based AI application. The application enables researchers to compare their own analyses with an AI-enabled analysis, helping them reflect critically on implicit biases.

For details, see our manuscripts on SPIRAL co-design and related work, as well as our Compassionate Care website:

- Kam ASH, Zhao G, Huang CL, et al. Residency spiral concussion curriculum design. Clin Teach. 2024;21(2):e13707. doi:10.1111/tct.13707

- Kam A, Lam T, Chang I, Huang RS, Fernandez N, Richardson D. Resident perceptions of learning challenges in concussion care education. Can Med Educ J. Published online 2023. doi:10.36834/cmej.78016

- Kam A. Compassionate Care Map. Published 2025. Accessed October 7, 2025. https://www.compassionatehealthcare.ca/

- Kam A, Fernandez N, Richardson D, Wiljer D. Explicitation interviews on compassionate tacit knowledge: a pilot study contrasting AI & human processes [slides]. MedEdPublish. 2025;15:185. doi:10.21955/mep.1115840.1

The technical process was straightforward. The real work lay in the conceptual design: co-designing rubrics around compassionate care, based on the co-action conceptualization framework, that could then be embedded in the AI. Resources included CIHR funding, institutional support, and a multi-stakeholder co-design process with patients, caregivers, clinicians, and learners.

How do you navigate privacy concerns?

We use de-identified data throughout the process. Additionally, OSCE interaction data processed by the generative AI model (GPT) are not used for system improvement, which mitigates the risk of unintended data retention. The AI-enabled bot is hosted on UHN Azure, a secure platform where all data are stored safely.

Transcripts are de-identified, and all data security measures comply with institutional privacy standards. This safeguards learners’ contributions and builds trust in the system. In the future, infrastructure for protecting personal health information will need to be put in place to support functions such as blurring faces and removing names during the data collection and data analysis stages.

What processes are required to get approval as an evaluation tool?

The approval pathway has several steps:

- REB approval to ensure ethical compliance.

- Institutional approval from the research institute for structural and technical support.

- Validation studies to establish the tool’s accuracy and learning effectiveness.

How can our audience contact you or interact with your project?

You’re welcome to connect with me by email: Alice.kam@uhn.ca! I also share updates through my institutional project page: https://kite-uhn.com/scientist/alice-kam. We invite educators, learners, and patients to join our co-design sessions and pilot testing as we refine and scale the tool.

Want your project to be featured next? Email us at cfd@unityhealth.to for more details!
